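(AFP, Paris, 20th) American engineers have built AWARE-2, a prototype gigapixel camera about the size of a bedside table, whose single-shot resolution is 1,000 times that of today's megapixel cameras. The developers say AWARE-2 is not the world's first gigapixel camera, but it is the smallest and fastest of its kind. It opens new possibilities for improving airport security, military surveillance, and even online coverage of sporting events. A pixel is a single point of light in a digital image; together, pixels form the picture.

Photos taken by today's cameras are measured in megapixels, and typical consumer cameras range from 8 to 40 megapixels. Most gigapixel images today are digitally stitched together from many megapixel images.

Project member David Brady told AFP: "Our camera can record a gigapixel photo in less than a tenth of a second." Gigapixel photography can capture details invisible to the naked eye, and the image stays sharp when you zoom in afterwards. (Translator: 徐嘉偉, Central News Agency)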

BTW, what does an 80-gigapixel photo look like?!

http://www.youtube.com/watch?v=GgEJif2rl0M&feature=related

Try it for yourself!

http://www.360cities.net/london-photo-en.html?v=86.7%2C7.9%2C17

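Fastest, smallest gigapixel camera is born: 5 times sharper than the human eye, consumer version in 7 years

Hong Kong's Ming Pao reports that Duke University, with funding from the US Department of Defense, has developed the world's fastest and smallest "super digital camera". Its photos reach a resolution of 1 gigapixel, 100 times sharper than today's typical digital cameras, and a single press of the shutter captures a 120-degree field of view. The US is expected to use it for military surveillance, including mounting it on unmanned reconnaissance aircraft to observe foreign military activity. The university also plans to miniaturize the camera, hoping to make it handheld within as little as 7 years and bring it into ordinary households.

According to The Wall Street Journal, the more pixels a camera has, the larger its lens generally has to be. The gigapixel instruments astronomers use to observe the universe, for example, have optics that routinely measure meters across. Duke University's new gigapixel Aware-2 camera, however, measures only 75 × 50 × 50 cm, about the size of two microwave ovens and less than half the size of astronomers' gigapixel-class instruments, and weighs about 100 pounds.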

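The researchers explain that the camera is so much smaller because it does not use a traditional single lens. Instead, 98 microcameras, each with a 14-megapixel sensor, are arranged around a spherical lens 6 cm in diameter. The microcameras share the spherical lens to take the picture, and the sensors correct the image.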
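As a rough check on these figures, the short Python sketch below multiplies out the sensor count. The 98 microcameras and 14 megapixels per sensor come from the report; the 1.0-gigapixel composite figure comes from the Duke project description quoted later in this post. Attributing the difference to overlap between neighbouring microcamera fields and to cropping is an assumption on my part, not something either source states.

```python
# Back-of-the-envelope pixel budget for the AWARE-2 array.
MICROCAMERAS = 98            # from the news report
SENSOR_MEGAPIXELS = 14       # per microcamera, from the news report
COMPOSITE_MEGAPIXELS = 1000  # "1.0 gigapixel image" per the Duke description


def raw_megapixels(cameras: int, mp_per_sensor: int) -> int:
    """Total sensor pixels before any stitching, in megapixels."""
    return cameras * mp_per_sensor


raw = raw_megapixels(MICROCAMERAS, SENSOR_MEGAPIXELS)
# Share of raw sensor pixels that ends up in the composite image.
# ASSUMPTION: the remainder is lost to overlap and cropping.
retained = COMPOSITE_MEGAPIXELS / raw

print(f"raw sensor pixels: {raw} MP")
print(f"share kept in the composite: {retained:.0%}")
```

So the array carries roughly 1.4 gigapixels of raw sensor area to deliver a composite of about 1 gigapixel.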

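The photos are 5 times as sharp as normal human vision: detail the naked eye can make out at 20 feet is still clearly resolved at 100 feet. The researchers say the camera can easily magnify fine detail 800 meters away, such as license plates and road signs, which makes wide-area observation easier. Users also need not worry about focusing, because the photo is taken by nearly a hundred cameras, each focusing on its own region.

The camera captures wide-angle photos spanning 120 degrees horizontally and 50 degrees vertically, far wider than the gigapixel-class cameras typically used in astronomy. For example, each of the four 1.4-gigapixel cameras on the University of Hawaii's Pan-STARRS telescopes, used to watch for near-Earth objects, covers only 3 degrees of sky. Duke optical engineer David Brady says shooting with a conventional digital camera is "like looking at the world through a drinking straw", while Aware-2 is "like a fire hose".

In addition, each exposure takes only 1/10 second, so there is no need to worry about moving subjects shifting position between exposures. After the shot, the data from all the lenses are combined into a single photo and saved to storage; the whole process takes 18 seconds.

Aware-2 currently shoots only in black and white, but the scientists hope to complete a 10-gigapixel color super camera by the end of this year that takes 10 photos per second, approaching video speed. The immediate challenges are shrinking the camera and reducing its power consumption. The researchers expect to release a commercial version next year, with media organizations and researchers as potential users, and estimate a handheld consumer version in 7 years at the earliest. An Aware-2 currently costs about US$100,000 to build; in mass production the cost could fall to US$1,000.

The super camera project is funded with US$25 million from the US Defense Advanced Research Projects Agency (DARPA), which hopes to develop compact high-resolution cameras for automated aerial and ground surveillance systems, for monitoring at home and abroad, and for strengthening security at facilities such as airports.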

Below Reference: GearBurn

AWARE2 project, English version (reference: Duke)

AWARE2 Multiscale Gigapixel Camera

Introduction

This program is focused on building wide-field, video-rate, gigapixel cameras in small, low-cost form factors. Traditional monolithic lens designs must increase f/# and lens complexity and reduce field of view as image scale increases. In addition, traditional electronic architectures are not designed for highly parallel streaming and analysis of large-scale images. The AWARE Wide Field of View project addresses these challenges using multiscale designs that combine a monocentric objective lens with arrays of secondary microcameras.

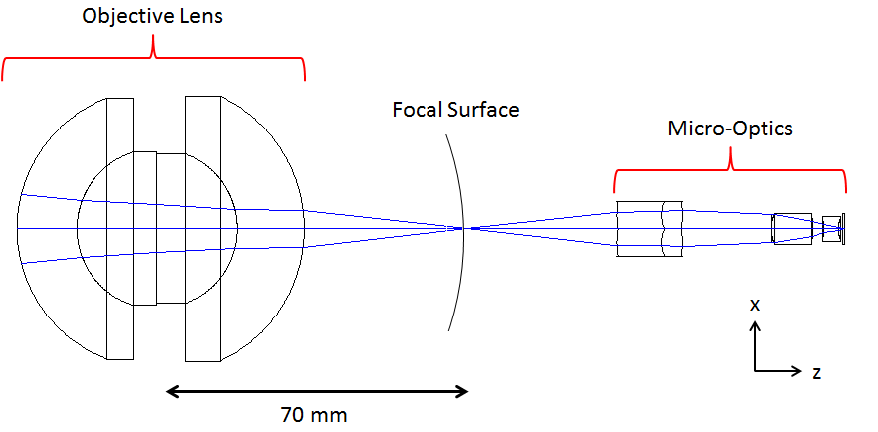

The optical design explored here utilizes a multiscale design in conjunction with a monocentric objective lens [2] to achieve near diffraction limited performance throughout the field. A monocentric objective enables the use of identical secondary systems (referred to as microcameras) greatly simplifying design and manufacturing. Following the multiscale lens design methodology, the field-of-view (FOV) is increased by arraying microcameras along the focal surface of the objective. In practice, the FOV is limited by the physical housing. This yields a much more linear cost and volume versus FOV. Additionally, each microcamera operates independently, offering much more flexibility in image capture, exposure, and focus parameters. A basic architecture is shown below, producing a 1.0 gigapixel image based on 98 micro-optics covering a 120 by 40 degree FOV.

Design Methodology

Optics

Multiscale lens design is an attempt at severing the inherent connection between geometric aberrations and aperture size that plagues traditional lenses [1]. By taking advantage of the superior imaging capabilities of small scale optics, a multiscale lens can effectively increase its field-of-view and image size by simply arraying additional optical elements, similar to a lens array. The resulting partial images can then be stitched during post-processing to create a single image of a large field. An array of identical microcameras is used to synthesize a curved focal plane, with micro-optics to locally flatten the field onto a standard detector.

It is increasingly difficult to achieve diffraction-limited performance in an optical instrument as the entrance aperture increases in size. Scaling the size of an optical system to gigapixels also scales the optical path difference errors and the resulting aberrations [9]. Because of this, larger instruments require more surfaces and elements to produce diffraction-limited performance. Multiscale designs [1] are a means of combating this escalating complexity. Rather than forming an image with a single monolithic lens system, multiscale designs divide the imaging task between an objective lens and a multitude of smaller micro-optics. The objective lens is a precise but simple lens that produces an imperfect image with known aberrations. Each micro-optic camera relays a portion of the objective's image onto its respective sensor, correcting for the objective aberrations to form a diffraction-limited image. Because there are typically hundreds or thousands of microcameras per objective, the microcamera optics are much smaller and therefore easier and cheaper to fabricate. The scale of the microcameras is typically that of plastic molded lenses, enabling mass production of complex aspherical shapes and therefore minimizing the number of elements. An example optical layout is modeled in the figure below.

Electronics

The electronics subsystem reflects the multiscale optical design and has been developed to scale to an arbitrary number of microcameras. The focus and exposure parameters of each camera are independently controlled and the communications architecture optimized to minimize the amount of transmitted data. The electronics architecture is designed to support multiple simultaneous users and is able to scale the output bandwidth depending on application requirements.

In the current implementation, each microcamera includes a 14 megapixel focal plane, a focus mechanism, and a HiSPi interface for data transmission. An FPGA-based camera control module provides local processing and data management. The control modules communicate over Ethernet with an external rendering computer. Each module connects to two microcameras and is used to sync image collection, scale images based on system requirements, and implement basic exposure and focus capabilities for the microcameras.
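To get a feel for why the architecture works so hard to limit transmitted data, the sketch below counts control modules and raw pixel throughput for a given array size. The two-microcameras-per-module and 14-megapixel figures are from the text above; the 10 fps frame rate is the full-resolution rate quoted for the prototype elsewhere on this page, and applying it to every configuration is an assumption. No bit depth is assumed, so the result is in pixels per second rather than bytes.

```python
import math

SENSOR_MEGAPIXELS = 14   # per-microcamera focal plane, from the text
CAMERAS_PER_MODULE = 2   # each control module serves two microcameras


def control_modules(microcameras: int) -> int:
    """Number of control modules needed for the array."""
    return math.ceil(microcameras / CAMERAS_PER_MODULE)


def raw_pixel_rate(microcameras: int, frames_per_second: int) -> int:
    """Unbinned pixels per second produced by the whole array."""
    return microcameras * SENSOR_MEGAPIXELS * 1_000_000 * frames_per_second


# 98 and 226 are the two array sizes mentioned on this page.
# ASSUMPTION: 10 fps applies to both configurations.
for cameras in (98, 226):
    modules = control_modules(cameras)
    rate = raw_pixel_rate(cameras, 10)
    print(f"{cameras} microcameras: {modules} modules, {rate / 1e9:.1f} Gpixel/s raw")
```

Even before choosing a bit depth, tens of gigapixels per second is far more than a single Ethernet link to a rendering computer could carry unscaled, which is consistent with the modules scaling images locally.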

Image formation

The image formation process generates a seamless image from the microcameras in the array. Since each camera operates independently, this process must account for alignment, rotation, and illumination discrepancies between the microcameras. To approach real-time compositing, a forward model based on the multiscale optical design is used to map individual image pixels into a global coordinate space. This allows display-scale images to be stitched at multiple frames per second independent of model corrections, which can happen at a significantly slower rate.
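The idea of mapping each sensor pixel into a shared coordinate space can be illustrated with a deliberately simplified sketch. The project's actual forward model is not given in the text; the code below swaps in a plain pinhole camera with a per-camera roll, elevation, and azimuth, and every name in it (`MicrocameraModel`, `pixel_to_global`, `focal_px`, and so on) is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class MicrocameraModel:
    """Hypothetical per-camera calibration (angles in radians)."""
    azimuth: float    # pointing direction, left/right
    elevation: float  # pointing direction, up/down
    roll: float       # rotation of the sensor about its optical axis
    focal_px: float   # focal length expressed in pixels
    center_u: float   # pixel column of the optical axis
    center_v: float   # pixel row of the optical axis


def pixel_to_global(cam: MicrocameraModel, u: float, v: float):
    """Map a sensor pixel to (azimuth, elevation) in the shared frame."""
    # Ray through the pixel in the camera frame (x right, y up, z forward).
    x, y, z = u - cam.center_u, v - cam.center_v, cam.focal_px
    # Undo sensor roll about the optical axis.
    cr, sr = math.cos(cam.roll), math.sin(cam.roll)
    x, y = x * cr - y * sr, x * sr + y * cr
    # Tilt the optical axis up by the camera's elevation.
    ce, se = math.cos(cam.elevation), math.sin(cam.elevation)
    y, z = y * ce + z * se, -y * se + z * ce
    # Swing the optical axis sideways by the camera's azimuth.
    ca, sa = math.cos(cam.azimuth), math.sin(cam.azimuth)
    x, z = x * ca + z * sa, -x * sa + z * ca
    norm = math.sqrt(x * x + y * y + z * z)
    return math.atan2(x, z), math.asin(y / norm)


cam = MicrocameraModel(math.radians(30), math.radians(10), 0.0,
                       5000.0, 2000.0, 1500.0)
az, el = pixel_to_global(cam, 2000.0, 1500.0)  # the camera's central pixel
print(math.degrees(az), math.degrees(el))
```

The central pixel lands on the camera's own pointing direction, as expected. Because the mapping depends only on calibration values, it can be evaluated once per camera and reused for every frame, while the calibration itself is refined on a slower schedule.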

The current image formation process supports two functional modes of operation. In the "Live-View" mode, the camera generates a single display-scale image stream by binning information at the sensor level to minimize the transmission bandwidth and then performing GPU-based compositing on a display computer. This mode allows users to interactively explore events in the scene in real time. The "Snapshot" mode captures a full dataset in 14 seconds and stores the information for future rendering and analysis. This mode is used for capturing still images such as those presented on the AWARE website.
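Binning is what keeps the Live-View stream small: neighbouring pixels are merged before transmission, so the data volume drops by the square of the binning factor. The camera does this at the sensor level in hardware; the pure-Python function below is only a software illustration of the arithmetic, and the name `bin_pixels` and the choice of averaging rather than summing are assumptions.

```python
def bin_pixels(image, factor):
    """Average non-overlapping factor x factor blocks of a 2D image.

    `image` is a list of equal-length rows of numbers. The output has
    1 / factor**2 as many pixels as the input.
    """
    rows, cols = len(image), len(image[0])
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of factor")
    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block_sum = sum(
                image[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            )
            out_row.append(block_sum / (factor * factor))
        binned.append(out_row)
    return binned


frame = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
small = bin_pixels(frame, 2)
print(small)  # [[3.5, 5.5], [11.5, 13.5]]
```

With 2 × 2 binning a 16-pixel frame becomes 4 pixels, a fourfold reduction; larger factors trade more resolution for proportionally less bandwidth.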

Flexibility

A major advantage of this design is that it can be scaled. Except for slightly different surface curvatures, the same microcamera design suffices for 2, 10, and 40 gigapixel systems. FOV is also strictly a matter of adding more cameras, with no change in the objective lens or micro-optic design.

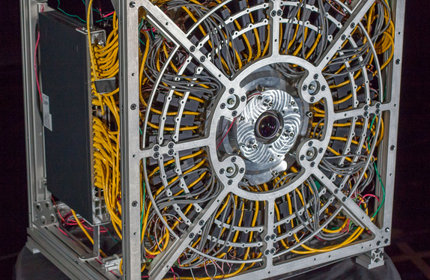

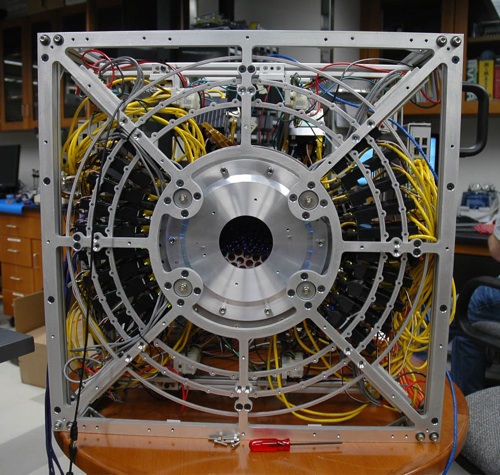

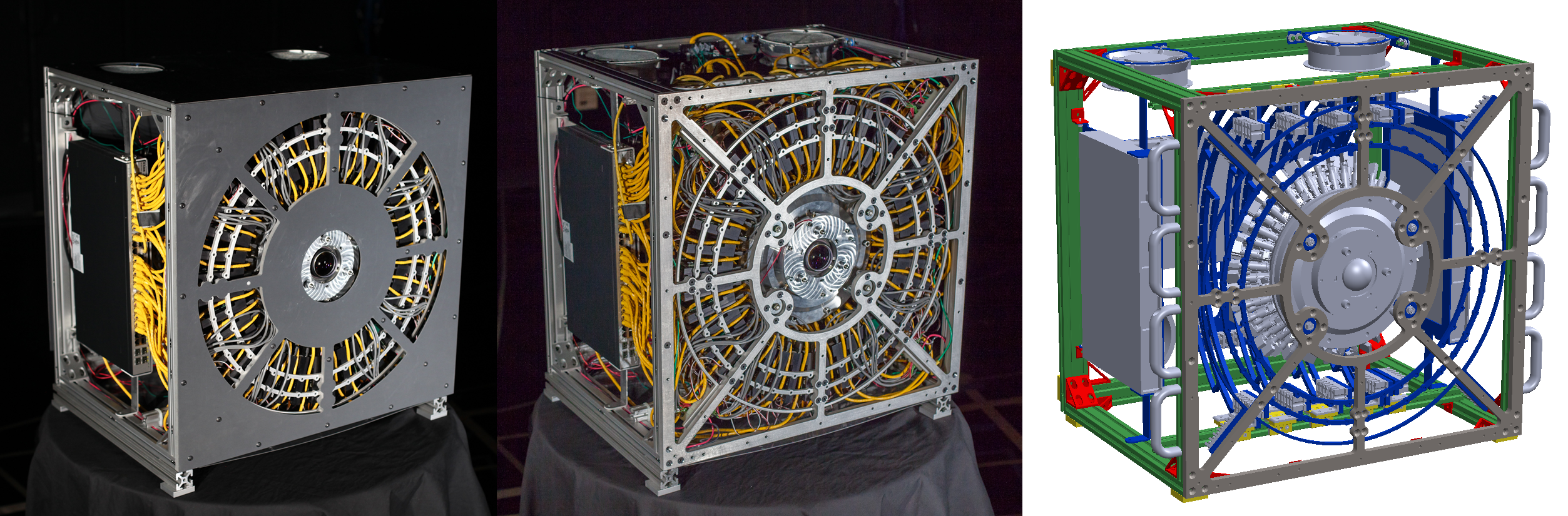

Current Prototype System

Currently, a two-gigapixel prototype camera has been built and is shown below. This system is capable of a 120 degree circular FOV with 226 microcameras, a 38 microradian FOV for a single pixel, and an effective f-number of 2.17. Each microcamera operates at 10 fps at full resolution. The optical volume is about 8 liters and the total enclosure is about 300 liters. The optical track length from the first surface of the objective to the focal plane is 188 mm. Specifications of camera one can be found here: AWARE2 camera 1, and specifications of camera two can be found here: AWARE2 camera 2. Example images taken by both systems can be found here: AWARE website.
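The quoted numbers can be cross-checked against each other with a little geometry. The sketch below assumes that pixels sample the field uniformly and that each pixel covers a solid angle equal to the square of its 38 microradian FOV; neither assumption is stated in the text. The 800 m distance is taken from the news report earlier in this post and is used purely for illustration.

```python
import math

IFOV_RAD = 38e-6       # single-pixel field of view, from the text
FULL_CONE_DEG = 120.0  # circular field of view, from the text


def cone_solid_angle(full_angle_deg: float) -> float:
    """Solid angle in steradians of a cone with the given full apex angle."""
    half = math.radians(full_angle_deg / 2)
    return 2 * math.pi * (1 - math.cos(half))


def pixel_count(full_angle_deg: float, ifov_rad: float) -> float:
    """Pixels needed to tile the cone. ASSUMPTION: uniform square pixels."""
    return cone_solid_angle(full_angle_deg) / ifov_rad ** 2


def footprint_m(distance_m: float, ifov_rad: float) -> float:
    """Width covered by one pixel at a given distance (small-angle)."""
    return distance_m * ifov_rad


print(f"{pixel_count(FULL_CONE_DEG, IFOV_RAD) / 1e9:.2f} gigapixels")
print(f"{footprint_m(800, IFOV_RAD) * 100:.1f} cm per pixel at 800 m")
```

Under these assumptions the 120 degree cone needs a little over 2 gigapixels, which agrees with the "two-gigapixel" description, and a single pixel spans about 3 cm at 800 m.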

Construction

AWARE-2 was constructed by an academic/industrial consortium with significant contributions from more than 50 graduate students, researchers and engineers. Duke University is the lead institution and led the design and manufacturing team. The lens design team is led by Dan Marks at Duke and includes lens designers from the University of California San Diego (Joe Ford and Eric Tremblay) and from Rochester Photonics and Moondog Optics. The electronic architecture team is led by Ron Stack at Distant Focus Corporation. The University of Arizona team led by Mike Gehm is responsible for image formation, the image data pipeline and stitching. Raytheon Corporation is responsible for applications development. Aptina is our focal plane supplier and is supplying embedded processing components. Altera supplies field-programmable gate arrays. The construction process is illustrated in the time-lapse video below.

Future Systems

The AWARE-10 5-10 gigapixel camera is in production and will be on-line later in 2012. Significant improvements have been made to the optics, electronics, and integration of the camera. Some are described here: Camera Evolution. The goal of this DARPA project is to design a long-term production camera that is highly scalable from sub-gigapixel to tens-of-gigapixels. Deployment of the system is envisioned for military, commercial, and civilian applications.

People and Collaborators

This project is a collaboration with Duke University, University of Arizona, University of California San Diego, Aptina, Raytheon, RPC Photonics, and Distant Focus Corporation. The AWARE project is led at DARPA by Dr. Nibir Dhar. The seeds of AWARE began at DARPA through the integrated sensing and processing model developed by Dr. Dennis Healy [12].
